Summary

- 2025 marked a turning point for artificial intelligence, as excitement around rapid innovation gave way to practical evaluation of cost, performance, and long-term sustainability across the industry.

- AI infrastructure became a central concern, with companies reassessing the financial and operational realities of training and running large-scale models at production level.

- ChatGPT evolved from a novelty into a daily productivity layer, reinforcing the shift from experimental use to dependable, real-world applications.

- OpenAI, Google, DeepMind, and xAI faced intensified scrutiny, not only for technical capability but also for governance, competition, and responsible deployment.

- Business models took priority over pure model breakthroughs, as valuation discussions, including those around OpenAI’s current valuation, focused more on revenue paths and efficiency than on scale alone.

- The “all about vibe” moment reshaped expectations, signaling a future where trust, safety, and usability define success more than spectacle or hype.

For much of the past decade, artificial intelligence advanced on a wave of optimism that felt almost unstoppable. Bigger models, faster breakthroughs, and eye-catching demos created the belief that progress would remain exponential indefinitely. In 2025, that momentum met reality. The year did not signal a collapse of AI ambition, but it did introduce a long-overdue correction shaped by cost pressures, trust concerns, regulatory attention, and the practical limits of large-scale deployment.

From ChatGPT settling into the role of a daily productivity layer rather than a miracle machine, to OpenAI, Google, DeepMind, and xAI operating under increased scrutiny, the industry entered a more grounded phase. Questions around governance and safety moved into the mainstream, particularly after reports that OpenAI and Anthropic had raised concerns over xAI’s safety culture, which highlighted growing tension among leading AI labs. Expectations changed, conversations matured, and the future of AI began to focus less on spectacle and more on sustainability, responsibility, and long-term viability.

How the Year Started

The opening months of 2025 carried forward the momentum of previous years, with artificial intelligence still framed as a defining force of the decade. Product announcements, funding rounds, and model updates dominated headlines, reinforcing the idea that scale and speed were the primary measures of success. Many organizations entered the year with aggressive roadmaps, expecting continuous gains from larger models and broader deployment across consumer and enterprise use cases.

At the same time, early signals hinted at a shift beneath the surface. Rising compute costs, growing energy demands, and slower-than-expected returns on investment began influencing internal discussions. While optimism remained high, the tone subtly changed. Instead of asking how fast systems could grow, decision-makers started asking how stable, reliable, and economically sound that growth would be over the long term.

Build, Baby, Build

As 2025 progressed, the industry doubled down on construction: data centers, infrastructure pipelines, and internal tooling. Massive investments flowed into hardware, cloud capacity, and specialized chips, driven by the belief that stronger foundations would unlock the next wave of capability. Expansion became a priority, with companies racing to secure resources before competitors could.

However, this phase also revealed the strain of constant expansion. Scaling required coordination across supply chains, regulatory environments, and operational teams, exposing friction that rapid innovation had previously masked. Building at this level demanded patience, discipline, and long-term planning. The year made one thing clear: growth was no longer just about ambition; it required endurance, balance, and a clear understanding of limits.

The Expectation Reset

As 2025 unfolded, expectations around artificial intelligence shifted in a noticeable way. The excitement that once surrounded every new model release gave way to more practical questions about cost, reliability, and long-term value. Businesses and users alike began evaluating AI systems based on real outcomes rather than promises, especially as operating expenses and infrastructure demands became harder to ignore. The focus moved toward whether these systems could consistently perform in everyday scenarios without excessive overhead.

This reset was also influenced by growing legal and competitive pressure within the AI ecosystem. As major players expanded their reach, concerns around market control and fair competition became part of the conversation. That tension surfaced clearly when xAI filed an antitrust lawsuit against Apple and OpenAI, bringing attention to how tightly AI development, distribution, and platform access had become intertwined. The lawsuit reflected a broader realization that technological progress alone was no longer enough; governance, transparency, and competitive balance now played an equally important role.

As a result, the AI industry entered a more measured phase. Ambition remained strong, but it was paired with realism. Expectations settled into a space where progress was still valued, yet judged through the lens of sustainability, accountability, and long-term impact rather than hype alone.

From Model Breakthroughs to Business Models

A striking theme in 2025 was the shift from celebrating isolated breakthroughs toward embedding AI in products with clear paths to revenue. No longer were companies satisfied with impressive demos alone; progress had to be tied to viable business outcomes. In enterprise contexts, a large language model functioning as a feature felt less compelling than one that could justify its cost through measurable productivity gains.

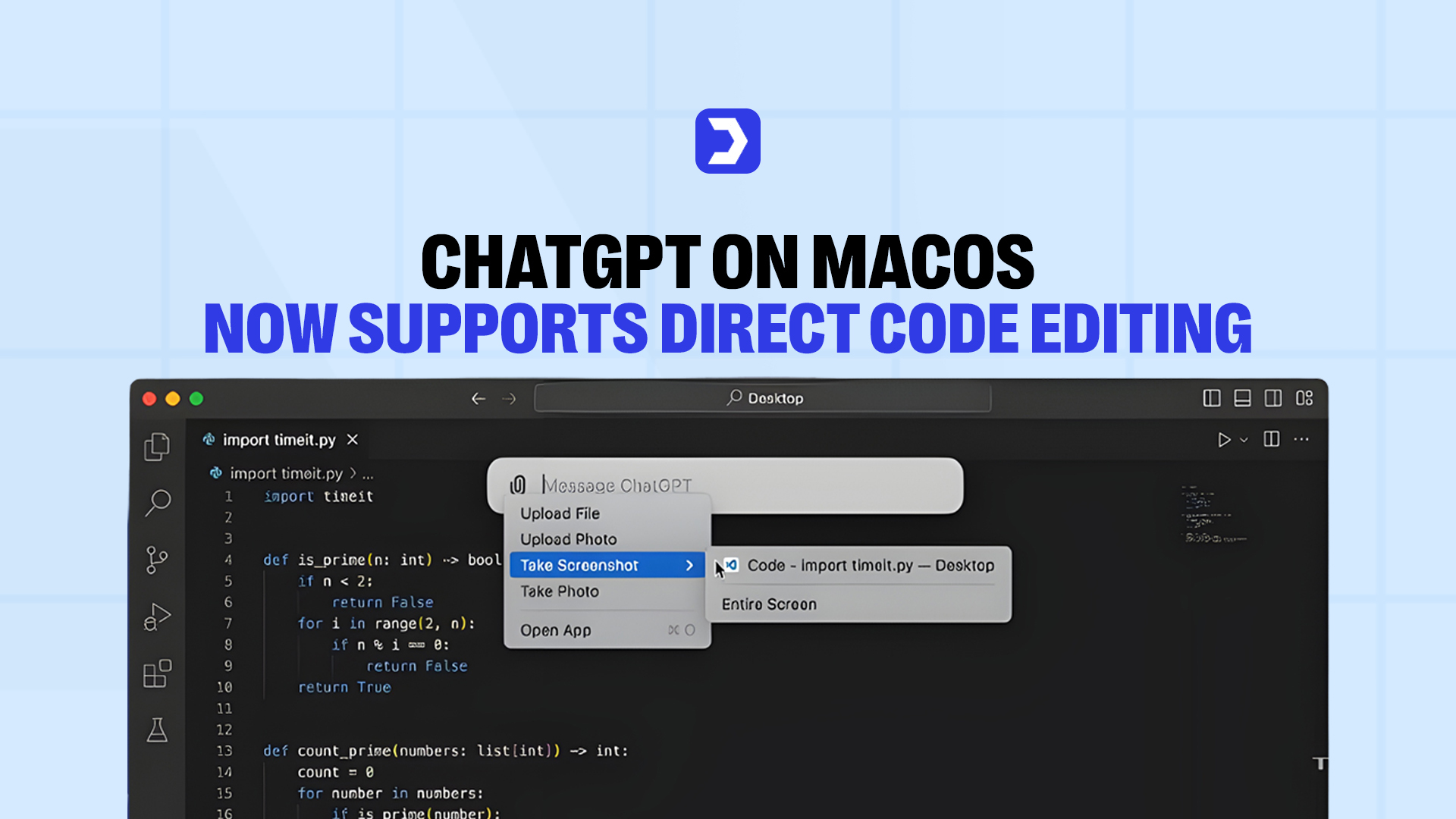

This transformation is visible in how ChatGPT’s integrations with major services like Spotify, Figma, and Canva changed workflows. Instead of users marveling at standalone generative outputs, they began relying on deeper integration points that enhance everyday productivity and creativity, showcasing that utility in context often outweighs raw novelty.

At the same time, companies wrestled with the economics of AI adoption. OpenAI’s current valuation and the financial expectations of backers remained under scrutiny, and rivals such as DeepMind adjusted strategy through cost optimization and tighter enterprise tooling. Meanwhile, Google balanced its vast compute investments with portfolio diversification, and xAI sought sustainable revenue channels beyond pure research.

The Trust and Safety Vibe Check

In 2025, conversations around trust and safety moved from abstract ideals to operational imperatives. The era of untested models being released with minimal oversight gave way to an era where reliability and user protection were treated as core engineering goals. As adoption grew across industries, issues that once seemed like edge cases surfaced in daily use, prompting deeper attention to robustness and error handling in deployed systems.

Even widely used systems powered by ChatGPT had to contend with edge-case behavior that affected real users. One notable example came when a long-standing em dash formatting issue was identified and corrected, underscoring the importance of eliminating subtle yet impactful bugs at scale. The resolution was not merely cosmetic; it reinforced the point that trust and safety engineering has become an ongoing, iterative commitment that determines whether AI enhancements feel dependable or brittle in real workflows.

Institutional trust also shaped how regulatory bodies and enterprise customers approached generative systems. Questions about data governance, consent, API misuse, and harmful outputs shaped procurement decisions and slowed some deployments until confidence in safeguards could match ambition. In essence, 2025 forced the industry to treat safety as a foundational metric on par with accuracy and latency, rather than a box to check after launch.

What Lies Ahead

If 2025 was the year of adjustment, then 2026 is shaping up as the year of integration. The broader AI ecosystem is ready to move past piecemeal capability releases and toward coherent offerings that work reliably inside existing infrastructures. Conversations now center on long-term maintenance, predictable performance, and bridging gaps between research ideals and interaction design realities.

At the same time, an increasing number of AI systems are moving into regulated industry contexts such as healthcare, finance, enterprise software, and public sector services, where reliability isn’t optional. These environments demand clarity on data provenance, model behavior under edge conditions, and support commitments that reflect mission-critical stakes. Within this shifting context, platforms built by Digital Software Labs and similar innovators can play a role in making AI tangible and dependable for products that users engage with daily.

Strategic roadmaps are now prioritizing operational sustainability over feature count. The next wave of AI adoption won’t be driven by grand claims of novel algorithms alone but by proven solutions that deliver stable, measurable value across use cases. As the dust settles on the “vibe check” of 2025, the broader industry is poised to refine what success looks like and how applied intelligence can be woven into the fabric of everyday technology.